Page 93 - MSAM-3-4

P. 93

Materials Science in Additive Manufacturing Super-resolution method for L-PBF

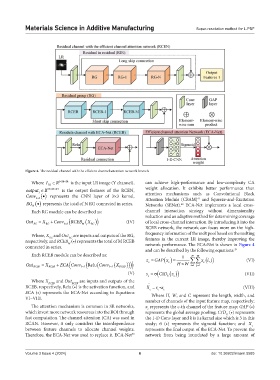

Figure 4. The residual channel with the efficient channel attention network branch

Where I R 12424 is the input LR image (Y channel), can achieve high-performance and low-complexity CA

LR

output R 64 24 24 is the output features of the RCEN, weight allocation. It exhibits better performance than

1

Conv represents the CNN layer of 3×3 kernel, attention mechanisms such as Convolutional Block

33

Attention Module (CBAM) and Squeeze-and-Excitation

41

RG represents the total of N RG connected in series. Networks (SENet). ECA-Net implements a local cross-

42

N

Each RG module can be described as: channel interaction strategy without dimensionality

reduction and an adaptive method for determining coverage

Out RG X RG Conv RCEB X (IV) of local cross-channel interaction. By introducing it into the

M

RG

33

RCEB network, the network can focus more on the high-

Where, X and Out are inputs and outputs of the RG, frequency information of the melt pool based on the melting

RG

RG

respectively, and RCEB (•) represents the total of M RCEB features in the current LR image, thereby improving the

M

connected in series. network performance. The ECA-Net is shown in Figure 4

and can be described by the following equations: 39

Each RCEB module can be described as: 1 H W xi j

(

c

c

Out RCEB = X RCEB ECA Conv 33× + ( Relu (Conv 33× ( X RCEB )))) z GAP x HW i1 c , (VI)

j1

C Dz

(V) s 1 k c (VII)

c

Where X RCEB and Out RCEB are inputs and outputs of the

∼

RCEB, respectively, Relu (•) is the activation function, and X = s xi c (VIII)

c

c

ECA (•) represents the ECA-Net according to Equations Where H, W, and C represent the length, width, and

VI–VIII.

number of channels of the input feature map, respectively;

The attention mechanism is common in SR networks, x represents the c-th channel of the feature map; GAP (•)

c

which invest more network resources into the ROI through represents the global average pooling; C1D (•) represents

K

fast computation. The channel attention (CA) was used in the 1-D Conv layer and k is its kernel size which is 3 in this

RCAN. However, it only considers the interdependence study; σ (•) represents the sigmoid function; and X

c

between feature channels to allocate channel weights. represents the final output of the ECA-Net. To prevent the

Therefore, the ECA-Net was used to replace it. ECA-Net network from being inundated by a large amount of

39

Volume 3 Issue 4 (2024) 6 doi: 10.36922/msam.5585