Page 94 - MSAM-3-4


Materials Science in Additive Manufacturing Super-resolution method for L-PBF

information during training, ECA-Net collects cross-channel information through a 1-D convolution and generates channel weights from the feature vector with the sigmoid function, explicitly modeling the dependencies between channels. 43 Reweighting the channels with these weights emphasizes the key information in the melt pool image. This is particularly relevant to high-frequency features, which represent regions with high brightness gradients (the boundary of the melt pool). Compared with the black background and the high-brightness melt pool area, regions where the brightness gradient changes sharply carry key features that deserve attention. The adaptive reweighting strategy of the attention mechanism strengthens the expression of this key information.

2.4. U-Net branch for multi-level feature fusion

The SR reconstruction of melt pool images requires the network to extract key melting information from LR images and map it into a high-dimensional feature space. Natural images contain complex backgrounds and multiple types of objects. To reduce hardware requirements, they are typically cropped into smaller patches as input for network training, which may destroy their original semantic information. In contrast, melt pool images typically contain a small, bright melt pool. Treating the melt pool as a complete object during network learning therefore better preserves its features. This requires the network to have a larger receptive field and multi-scale feature extraction capability, so that it can learn the overall morphological features of the melt pool and guide the reconstruction of LR images based on the overall characteristics of the melt pool, rather than relying solely on the local brightness information of the image.
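The point about patch cropping can be made concrete with a small sketch. It assumes non-overlapping square tiles and represents the melt pool by its bounding box; the frame size, tile size, and melt pool position are hypothetical values chosen for illustration, not the training settings of this work.

```python
from typing import List, Tuple

# Hypothetical melt pool bounding box: (row0, col0, row1, col1), end-exclusive.
Box = Tuple[int, int, int, int]


def patches_touching(box: Box, height: int, width: int, patch: int) -> List[Tuple[int, int]]:
    """Return (row, col) indices of the non-overlapping patch x patch tiles
    of a height x width image that contain part of the bounding box."""
    r0, c0, r1, c1 = box
    hits = []
    for pr in range(0, height, patch):
        for pc in range(0, width, patch):
            # The tile covers rows [pr, pr + patch) and columns [pc, pc + patch).
            rows_overlap = min(r1, pr + patch) > max(r0, pr)
            cols_overlap = min(c1, pc + patch) > max(c0, pc)
            if rows_overlap and cols_overlap:
                hits.append((pr // patch, pc // patch))
    return hits


# Hypothetical 64 x 64 frame with a 10 x 12-pixel melt pool near the center.
pool = (27, 26, 37, 38)
print(patches_touching(pool, 64, 64, patch=32))  # [(0, 0), (0, 1), (1, 0), (1, 1)]
print(patches_touching(pool, 64, 64, patch=64))  # [(0, 0)]
```

In this toy case, a melt pool that straddles tile borders is split across four 32 × 32 training patches, whereas feeding the whole frame keeps it as a single object.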

U-Net is used as another feature extraction branch to achieve

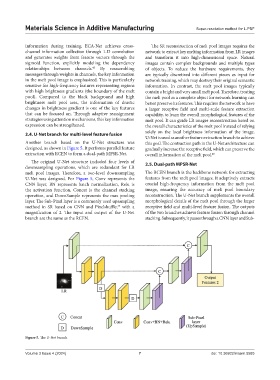

Another branch based on the U-Net structure was this goal. The contraction path in the U-Net architecture can

designed, as shown in Figure 5. It performs parallel feature gradually increase the receptive field, which can preserve the

extraction with RCEN to form a dual-path MPSR-Net. overall information of the melt pool. 45
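The receptive-field growth of a contraction path can be estimated with the standard recurrence for stacked layers. For a layer with kernel size k and stride s, the receptive field r grows by (k - 1) * j, and the input-pixel spacing j between adjacent outputs is multiplied by s. The sketch below assumes a classic U-Net level (two 3 × 3 convolutions followed by 2 × 2 max pooling, plus two 3 × 3 convolutions at the bottleneck) and a hypothetical 32 × 32 LR input. These are illustrative assumptions, not the layer settings or image size of this network.

```python
from typing import List, Tuple

# (kernel size, stride) of one layer; layer settings are assumed, not from the paper.
Layer = Tuple[int, int]


def contraction_layers(levels: int) -> List[Layer]:
    """Layer list for a contraction path with `levels` downsampling steps."""
    layers: List[Layer] = []
    for _ in range(levels):
        layers += [(3, 1), (3, 1), (2, 2)]  # Conv, Conv, DownSample (max pooling)
    layers += [(3, 1), (3, 1)]  # bottleneck convolutions
    return layers


def receptive_field(layers: List[Layer]) -> int:
    """Theoretical receptive field (in input pixels) of the last layer."""
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump  # widen by (k - 1) steps of the current spacing
        jump *= stride             # input-pixel spacing between adjacent outputs
    return rf


def bottleneck_size(input_size: int, levels: int) -> int:
    """Spatial size after `levels` 2 x 2 poolings ("same" padding for convs)."""
    size = input_size
    for _ in range(levels):
        size //= 2
    return size


lr_size = 32  # hypothetical LR melt pool image size
for levels in (2, 4):
    print(levels, receptive_field(contraction_layers(levels)), bottleneck_size(lr_size, levels))
# 2 32 8
# 4 140 2
```

Under these assumptions, two downsampling levels already give a receptive field spanning the whole hypothetical 32 × 32 input, whereas four levels reduce the bottleneck feature map to 2 × 2.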

The original U-Net structure includes four levels of downsampling, which are unnecessary for LR melt pool images; therefore, a U-Net with two downsampling levels was designed. In Figure 5, Conv denotes a CNN layer, BN denotes batch normalization, Relu is the activation function, Concat is the channel stacking operation, and DownSample denotes the max pooling layer. The Sub-Pixel layer is an upsampling method commonly used in CNN-based SR that combines a CNN layer with Pixelshuffle. 44 Here it provides a magnification of 2. The input and output of the U-Net branch are the same as those of RCEN.

2.5. Dual-path MPSR-Net

The RCEN branch is the backbone network for extracting features from the melt pool images. It adaptively extracts crucial high-frequency information from the melt pool image, ensuring accurate reconstruction of the melt pool boundary. The U-Net branch supplements the overall morphological details of the melt pool through its larger receptive field and multi-level feature fusion. The outputs of the two branches are fused through channel stacking. Subsequently, the fused features pass through a CNN layer and Sub-

Figure 5. The U-Net branch

Volume 3 Issue 4 (2024) 7 doi: 10.36922/msam.5585