Page 87 - AIH-2-4


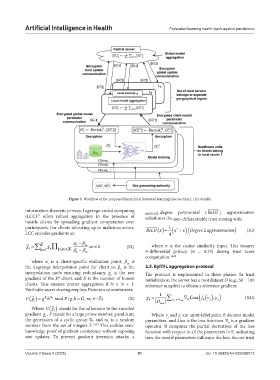

Artificial Intelligence in Health Federated learning health stack against pandemics

Figure 3. Workflow of the proposed hierarchical federated learning (See Section 2.1 for details)

information-theoretic privacy. Lagrange-coded computing (LCC) offers robust aggregation in the presence of hostile clients by spreading gradient computation over participants. For $N$ clients, of which up to $t$ may be malicious, LCC encodes the gradients as:

$$\tilde{g}_i = \sum_{k=1}^{K} g_k \prod_{\substack{1 \le m \le K \\ m \neq k}} \frac{\alpha_i - \beta_m}{\beta_k - \beta_m} \tag{IX}$$

where $\alpha_i$ is a client-specific evaluation point, $\beta_m$ is the Lagrange interpolation point for client $m$, $\beta_k$ is the interpolation node ensuring redundancy, $g_k$ is the raw gradient of the $k$th client, and $K$ is the number of honest clients. Correct aggregation is guaranteed if $N \ge 3t + 1$.

Verifiable secret sharing employs Pedersen commitments:

$$C_{\tilde{g}_i} = g^{\tilde{g}_i} h^{r_{a_i}} \bmod P, \qquad g, h \in \mathbb{G},\; r_{a_i} \in \mathbb{Z} \tag{X}$$

where $C_{\tilde{g}_i}$ is the commitment to the encoded gradient $\tilde{g}_i$, $P$ is a large prime number, $g$ and $h$ are generators of a cyclic group $\mathbb{G}$, and $r_{a_i}$ is a random number drawn from the integers $\mathbb{Z}$.14,22 This enables a zero-knowledge proof of gradient consistency without exposing raw updates. To prevent gradient inversion attacks, a second-degree polynomial approximation of the ReLU function replaces the non-differentiable trust scoring:27

$$\mathrm{ReLU}(x) \approx \frac{1}{4}\left(x^{2} + 2x + 1\right) \quad \text{(second-degree approximation)} \tag{XI}$$

where $x$ is the cosine similarity input. This ensures $\epsilon$-differential privacy ($\epsilon = 0.25$) during trust score computation.14,22

2.5. ByITFL aggregation protocol

The protocol is implemented in three phases. In trust initialization, the server holds a root dataset $D_{\text{root}}$ (e.g., 50–100 reference samples), which it uses to obtain a reference gradient:

$$g_0 = \frac{1}{|D_{\text{root}}|} \sum_{(v_j, y_j) \in D_{\text{root}}} \nabla_\theta \mathrm{Loss}\big(f_\theta(v_j), y_j\big) \tag{XII}$$

where $(v_j, y_j)$ are input-label pairs, $f_\theta$ is the model with parameters $\theta$, and Loss is the loss function. $\nabla_\theta$ is the gradient operator: it computes the partial derivatives of the loss with respect to every parameter in $\theta$, indicating how each parameter influences the loss. Secure trust

Volume 2 Issue 4 (2025) 81 doi: 10.36922/AIH025080013
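To make the Lagrange encoding of Equation (IX) concrete, here is a minimal Python sketch over exact rationals. The function names (`lagrange_weight`, `lcc_encode`, `lcc_decode`) and the toy evaluation points are illustrative assumptions, not the authors' implementation; practical LCC runs over a finite field and adds Reed-Solomon-style error correction to tolerate the $t$ malicious shares, which this sketch omits.

```python
from fractions import Fraction
from typing import List, Sequence

Vector = List[Fraction]


def lagrange_weight(k: int, z: Fraction, nodes: Sequence[Fraction]) -> Fraction:
    """Lagrange basis l_k(z) = prod_{m != k} (z - nodes[m]) / (nodes[k] - nodes[m])."""
    weight = Fraction(1)
    for m, node in enumerate(nodes):
        if m != k:
            weight *= (z - node) / (nodes[k] - node)
    return weight


def lcc_encode(grads: Sequence[Vector], betas: Sequence[Fraction], alpha: Fraction) -> Vector:
    """Encoded share for the client with evaluation point alpha, following Eq. (IX)."""
    share = [Fraction(0)] * len(grads[0])
    for k, g_k in enumerate(grads):
        w = lagrange_weight(k, alpha, betas)
        share = [s + w * x for s, x in zip(share, g_k)]
    return share


def lcc_decode(shares: Sequence[Vector], alphas: Sequence[Fraction],
               betas: Sequence[Fraction]) -> List[Vector]:
    """Recover every raw g_k from K (or more) error-free shares.

    The shares are evaluations of a degree-(K-1) polynomial u(z) with u(beta_k) = g_k,
    so interpolating through the (alpha_j, share_j) points and evaluating at beta_k
    returns g_k.
    """
    recovered = []
    for beta in betas:
        vec = [Fraction(0)] * len(shares[0])
        for j, share in enumerate(shares):
            w = lagrange_weight(j, beta, alphas)
            vec = [v + w * x for v, x in zip(vec, share)]
        recovered.append(vec)
    return recovered


if __name__ == "__main__":
    grads = [[Fraction(1), Fraction(2)], [Fraction(-3), Fraction(5)], [Fraction(4), Fraction(0)]]
    betas = [Fraction(b) for b in (1, 2, 3)]          # K = 3 interpolation nodes
    alphas = [Fraction(a) for a in (4, 5, 6, 7, 8)]   # N = 5 client evaluation points
    shares = [lcc_encode(grads, betas, a) for a in alphas]
    print(lcc_decode(shares[2:], alphas[2:], betas) == grads)  # any K shares suffice: prints True
```

The evaluation points $\alpha_i$ are kept disjoint from the nodes $\beta_k$: since $\ell_k(\beta_k) = 1$ and $\ell_m(\beta_k) = 0$ for $m \neq k$, encoding at $\alpha_i = \beta_k$ would hand that client the raw gradient $g_k$.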
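Equation (X) can be exercised with toy numbers. The sketch below assumes the encoded gradient has been quantized to integers (it appears as an exponent in Equation (X)) and uses a deliberately tiny, insecure group; the constants `P`, `Q`, `G`, `H` and the helpers `commit`/`verify` are hypothetical. Uppercase `G` and `H` stand for the generators $g$ and $h$, to avoid a clash with gradient symbols. Only the commitment and its additive homomorphism are shown, not the zero-knowledge proof of gradient consistency built on top of it.

```python
import secrets

# Toy group parameters (assumption, illustration only): P = 2Q + 1 is a safe prime and
# G, H both generate the subgroup of prime order Q in Z_P^*. Here H = G^8 mod P, so
# log_G(H) is known and the commitment is NOT binding; a real deployment needs a large
# group (e.g., >= 2048-bit modulus or an elliptic curve) in which nobody knows log_G(H).
P = 23
Q = 11
G = 4
H = 9


def commit(value: int, r: int) -> int:
    """Pedersen commitment C = G^value * H^r mod P, in the form of Eq. (X).

    Exponents are reduced mod Q (the subgroup order), so negative quantized
    gradient entries are handled too.
    """
    return (pow(G, value % Q, P) * pow(H, r % Q, P)) % P


def verify(c: int, value: int, r: int) -> bool:
    """Check that commitment c opens to (value, r)."""
    return c == commit(value, r)


if __name__ == "__main__":
    g_tilde = -2                   # one quantized coordinate of an encoded gradient (assumed integer)
    r = secrets.randbelow(Q)       # fresh blinding randomness
    c = commit(g_tilde, r)
    print(verify(c, g_tilde, r))   # the committer can open its own commitment
    # Additive homomorphism: multiplying commitments adds the committed values.
    print((commit(3, 5) * commit(4, 2)) % P == commit(7, 7))
```

The homomorphism is what lets a verifier check a commitment to an aggregate against the product of per-client commitments without seeing the individual values.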
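The text does not state how the coefficients in Equation (XI) were chosen. As an illustrative assumption, the sketch below derives a degree-2 least-squares fit of ReLU over the cosine-similarity range $[-1, 1]$ in plain Python and uses it in place of $\mathrm{ReLU}(\cos(\cdot,\cdot))$; the fitted coefficients (roughly $0.09 + 0.5x + 0.47x^2$) are not necessarily those used by the authors, and all helper names are hypothetical. The design point is that LCC can only evaluate polynomial functions of encoded data, so a quadratic stand-in, built from additions and multiplications alone, is what makes the trust score computable on shares.

```python
import math
from typing import Sequence, Tuple


def fit_quadratic_relu(num_points: int = 2001) -> Tuple[float, float, float]:
    """Least-squares fit of c0 + c1*x + c2*x**2 to ReLU(x) on an even grid over [-1, 1]."""
    xs = [-1.0 + 2.0 * i / (num_points - 1) for i in range(num_points)]
    ys = [max(0.0, x) for x in xs]
    # Normal equations A c = b, with A[r][s] = sum x^(r+s) and b[r] = sum y * x^r.
    a = [[sum(x ** (r + s) for x in xs) for s in range(3)] for r in range(3)]
    b = [sum(y * x ** r for x, y in zip(xs, ys)) for r in range(3)]
    # Gaussian elimination with partial pivoting (the system is only 3 x 3).
    for col in range(3):
        pivot = max(range(col, 3), key=lambda row: abs(a[row][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, 3):
            factor = a[row][col] / a[col][col]
            a[row] = [x - factor * y for x, y in zip(a[row], a[col])]
            b[row] -= factor * b[col]
    # Back substitution on the upper-triangular system.
    c = [0.0, 0.0, 0.0]
    for row in (2, 1, 0):
        c[row] = (b[row] - sum(a[row][s] * c[s] for s in range(row + 1, 3))) / a[row][row]
    return c[0], c[1], c[2]


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """cos(u, v) = u . v / (|u| |v|); assumes both vectors are non-zero."""
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))


def smooth_trust(update: Sequence[float], reference: Sequence[float],
                 coeffs: Tuple[float, float, float]) -> float:
    """Quadratic stand-in for ReLU(cos(update, reference)): only + and * on x."""
    x = cosine_similarity(update, reference)
    c0, c1, c2 = coeffs
    return c0 + c1 * x + c2 * x * x


if __name__ == "__main__":
    coeffs = fit_quadratic_relu()
    print(coeffs)
    print(smooth_trust([1.0, 2.0], [1.0, 2.0], coeffs))    # aligned update: high score
    print(smooth_trust([1.0, 2.0], [-1.0, -2.0], coeffs))  # opposed update: score near 0
```

Unlike the exact ReLU, the quadratic gives a small positive score to opposed updates and slightly exceeds 1 for perfectly aligned ones, which is the price of replacing the kink with a smooth polynomial.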
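Equation (XII) is an ordinary average of per-sample gradients over the root dataset. The section does not fix a model or loss, so the sketch below assumes, purely for illustration, a linear model $f_\theta(v) = \theta \cdot v$ with squared loss $\tfrac{1}{2}(f_\theta(v) - y)^2$, whose gradient has the closed form $(\theta \cdot v - y)\,v$. The names `squared_loss_grad` and `reference_gradient` are hypothetical.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[List[float], float]  # (input v_j, label y_j)


def squared_loss_grad(theta: Sequence[float], v: Sequence[float], y: float) -> List[float]:
    """Gradient w.r.t. theta of 0.5 * (theta . v - y)^2 for the assumed linear model."""
    residual = sum(t * x for t, x in zip(theta, v)) - y
    return [residual * x for x in v]


def reference_gradient(theta: Sequence[float], d_root: Sequence[Sample]) -> List[float]:
    """g_0 as in Eq. (XII): mean per-sample gradient over the root dataset D_root."""
    total = [0.0] * len(theta)
    for v, y in d_root:
        total = [acc + g for acc, g in zip(total, squared_loss_grad(theta, v, y))]
    return [acc / len(d_root) for acc in total]


if __name__ == "__main__":
    d_root = [([1.0, 0.0], 1.0), ([0.0, 1.0], 2.0)]  # toy root dataset (the text suggests 50-100 samples)
    print(reference_gradient([0.0, 0.0], d_root))     # prints [-0.5, -1.0]
```

For a neural network, an automatic-differentiation framework would supply $\nabla_\theta \mathrm{Loss}$ per sample; the averaging step stays the same.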