Page 320 - IJB-8-4

P. 320

Deep learning for EBB control

A B

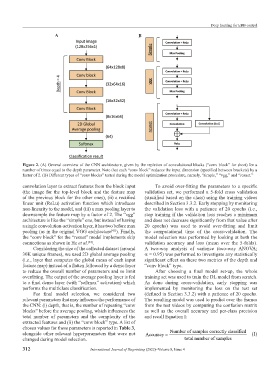

Figure 2. (A) General overview of the CNN architecture, given by the repletion of convolutional blocks (“conv block” for short) for a

number of times equal to the depth parameter. Note that each “conv block” reduces the input dimension (specified between brackets) by a

factor of 2. (B) Different types of “conv blocks” tested during the model optimization procedure, namely, “simple,” “vgg,” and “resnet.”

convolution layer to extract features from the block input To avoid over-fitting the parameters to a specific

(the image for the top-level block and the feature map validation set, we performed a 5-fold cross validation

of the previous block for the other ones), (ii) a rectified (stratified based on the class) using the training videos

linear unit (ReLu) activation function which introduces described in Section 3.3.2. Early stopping by monitoring

non-linearity to the model, and (iii) a max pooling layer to the validation loss with a patience of 20 epochs (i.e.,

downsample the feature map by a factor of 2. The “vgg” stop training if the validation loss reaches a minimum

architecture is like the “simple” one, but instead of having and does not decrease significantly from that value after

a single convolution-activation layer, it has two before max 20 epochs) was used to avoid over-fitting and limit

pooling (as in the original VGG architecture ). Finally, the computational time of the cross-validation. The

[43]

the “conv block” for the “resnet” model implements skip model selection was performed by looking at both the

connections as shown in He et al. . validation accuracy and loss (mean over the 5-folds).

[44]

Considering the size of the collected dataset (around A two-way analysis of variance (two-way ANOVA;

30K unique frames), we used 2D global average pooling α = 0.95) was performed to investigate any statistically

(i.e., layer that computes the global mean of each input significant effect on these two metrics of the depth and

feature map) instead of a flatten followed by a dense layer “conv block” type.

to reduce the overall number of parameters and so limit After choosing a final model set-up, the whole

overfitting. The output of the average pooling layer is fed training set was used to train the DL model from scratch.

to a final dense layer (with “softmax” activation) which As done during cross-validation, early stopping was

performs the multiclass classification. implemented by monitoring the loss on the test set

For final model selection, we considered two (defined in Section 3.3.2) with a patience of 20 epochs.

relevant parameters that may influence the performance of The resulting model was used to predict over the frames

the CNN: (i) depth, that is, the number of repeating “conv from the test videos by computing the confusion matrix

blocks” before the average pooling, which influences the as well as the overall accuracy and per-class precision

total number of parameters and the complexity of the and recall Equation I:

extracted features and (ii) the “conv block” type. A list of

chosen values for these parameters is reported in Table 3,

alongside other relevant hyperparameters that were not Accuracy = Number of samples correctly classified (I)

changed during model selection. total number of samples

312 International Journal of Bioprinting (2022)–Volume 8, Issue 4