Page 42 - AIH-1-4

P. 42

Artificial Intelligence in Health Segmentation and classification of DR using CNN

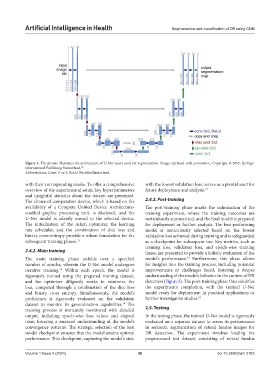

Figure 4. The picture illustrates the architecture of U-Net layers used for segmentation. Image reprinted with permission, Copyright © 2015, Springer

International Publishing Switzerland. 38

Abbreviations: Conv: 3 ×x 3; ReLU: Rectified linear unit.

with their corresponding masks. To offer a comprehensive with the lowest validation loss, serves as a pivotal asset for

overview of the experimental setup, key hyperparameters future deployment and analysis. 33

and insightful statistics about the dataset are presented.

The choice of computation device, which is based on the 2.4.3. Post-training

availability of a Compute Unified Device Architecture- The post-training phase marks the culmination of the

enabled graphic processing unit, is disclosed, and the training experiment, where the training outcomes are

U-Net model is adeptly moved to the selected device. meticulously summarized, and the final model is prepared

The initialization of the Adam optimizer, the learning for deployment or further analysis. The best-performing

rate scheduler, and the combination of dice loss and model is meticulously selected based on the lowest

binary cross-entropy provide a robust foundation for the validation loss achieved during training and is safeguarded

subsequent training phases. 31 as a checkpoint for subsequent use. Key metrics, such as

training loss, validation loss, and epoch-wise training

2.4.2. Main training times, are presented to provide a holistic evaluation of the

The main training phase unfolds over a specified model’s performance. Furthermore, this phase allows

18

number of epochs, wherein the U-Net model undergoes for insights into the training process, including potential

iterative training. Within each epoch, the model is improvements or challenges faced, fostering a deeper

33

rigorously trained using the prepared training dataset, understanding of the model’s behavior in the context of DR

and the optimizer diligently works to minimize the detection (Figure 5). The post-training phase thus solidifies

loss, computed through a combination of the dice loss the experiment’s completion, with the trained U-Net

and binary cross entropy. Simultaneously, the model’s model ready for deployment in practical applications or

proficiency is rigorously evaluated on the validation further investigative studies. 34

dataset to monitor its generalization capabilities. The

18

training process is intricately monitored with detailed 2.5. Testing

output, including epoch-wise loss values and elapsed In the testing phase, the trained U-Net model is rigorously

time, fostering a nuanced understanding of the model’s evaluated on a separate dataset to assess its performance

convergence patterns. The strategic selection of the best in semantic segmentation of retinal fundus images for

model checkpoint ensures that the model attains optimal DR detection. The experiment involves loading the

performance. This checkpoint, capturing the model’s state preprocessed test dataset, consisting of retinal fundus

Volume 1 Issue 4 (2024) 36 doi:10.36922/aih.2783