Page 349 - IJB-9-4

International Journal of Bioprinting Bioprinting with machine learning

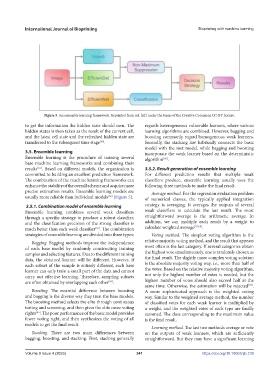

Figure 5. An ensemble learning framework. Reprinted from ref. [62] under the terms of the Creative Commons CC-BY license.

to get the information the hidden state should hold. The hidden state is then taken as the output of the current cell, and the latest cell state and the refreshed hidden state are transferred to the next time step [60].

3.5. Ensemble learning

Ensemble learning is the procedure of training several base machine learning models and combining their results [61]. By drawing on different models, the ensemble aims to build a strong prediction framework. Combining machine learning models can enhance the stability of the overall scheme and yield more precise estimates. Ensemble learning models are usually more reliable than individual models [62] (Figure 5).

3.5.1. Combination model of ensemble learning

Ensemble learning combines several weak classifiers through a specific strategy to produce a strong classifier, whose classification precision is much better than that of each weak classifier [63]. The combination strategies of ensemble learning are divided into three types:

Bagging. Bagging methods improve the independence of the base models by randomly constructing training samples and selecting features. Because the training data differ, the resulting learners differ. However, if the sample subsets were entirely disjoint, each base learner could train on only a small part of the data and could not learn effectively. Therefore, the sampled subsets usually overlap one another [64].

Boosting. The essential difference between boosting and bagging is the way they treat the base models. The boosting method selects the elite models through continuous testing and screening and then gives them more voting rights [65]. Poorly performing base models receive fewer voting rights, and the votes of all models are then combined to get the final result.

Stacking. There are two main differences between stacking and both bagging and boosting. First, stacking generally uses heterogeneous weak learners, combining various learning algorithms, whereas bagging and boosting commonly use homogeneous weak learners. Second, stacking usually combines the base models through a meta-model, while bagging and boosting combine the weak learners with a deterministic algorithm [66].

3.5.2. Result generation of ensemble learning

For the different prediction results that multiple weak classifiers produce, ensemble learning usually uses the following three methods to produce the final result.

Average method. For regression problems with numerical outputs, the typical combination strategy is averaging: the outputs of several weak learners are averaged to calculate the final result. The most straightforward average is the arithmetic average. In addition, we can multiply each result by a weight to calculate a weighted average [67,68].

Voting method. The simplest voting algorithm is relative majority voting, in which the category that appears most often is the final category. If several categories tie for the highest vote, one of them is chosen at random as the final result. A slightly more complex scheme is absolute majority voting, i.e., requiring more than half of the votes. Building on relative majority voting, the winning category must not only receive the most votes but also receive more than half of them; otherwise, the prediction is rejected [69]. A more sophisticated approach is weighted voting. Similar to the weighted average method, each weak learner's vote is multiplied by a weight, and the weighted votes for each category are summed. The category with the maximum total is the final result.

Learning method. The previous two methods average or vote on the outputs of weak learners, which is sufficiently straightforward. But they may have a significant learning

Volume 9 Issue 4 (2023) 341 https://doi.org/10.18063/ijb.739
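As an illustration of the average and voting methods described in Section 3.5.2, the following minimal Python sketch combines the outputs of several weak learners. The function names are hypothetical and are not taken from the cited references, and representing a rejected prediction as `None` is an assumption made here for simplicity.

```python
import random
from collections import Counter, defaultdict


def weighted_average(predictions, weights=None):
    """Arithmetic average, or weighted average if weights are given."""
    if weights is None:
        return sum(predictions) / len(predictions)
    # Normalize by the weight sum so the weights need not add up to 1.
    return sum(p * w for p, w in zip(predictions, weights)) / sum(weights)


def relative_majority_vote(labels, rng=random):
    """Return the most frequent label; ties are broken at random."""
    counts = Counter(labels)
    top = max(counts.values())
    tied = [label for label, count in counts.items() if count == top]
    return rng.choice(tied)


def absolute_majority_vote(labels):
    """Return the top label only if it has more than half of the votes.

    Otherwise the prediction is rejected (returned as None, an assumption).
    """
    label, top = Counter(labels).most_common(1)[0]
    return label if top > len(labels) / 2 else None


def weighted_vote(labels, weights):
    """Sum the weights per label and return the label with the largest total."""
    totals = defaultdict(float)
    for label, weight in zip(labels, weights):
        totals[label] += weight
    return max(totals, key=totals.get)
```

For example, with the votes `["a", "b", "b"]` and weights `[0.7, 0.2, 0.2]`, relative majority voting selects `"b"`, whereas weighted voting selects `"a"` because its single vote carries more weight than the other two combined.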