Page 348 - IJB-9-4

International Journal of Bioprinting Bioprinting with machine learning

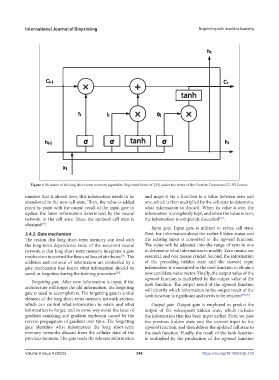

Figure 4. Structure of the long short-term memory algorithm. Reprinted from ref. [52] under the terms of the Creative Commons CC-BY license.

number that is almost zero, this information needs to be abandoned in the new cell state. Then, the value is added point by point to the output of the input gate, so that the latest information determined by the neural network is written into the cell state. Thus, the updated cell state is obtained [54].

3.4.2. Gate mechanism

Long short-term memory can deal with the long-term dependence issue of the recurrent neural network because it integrates a gate mechanism that controls the flow and loss of attributes [55]. The addition and removal of information are controlled by this gate mechanism, which learns during training what information should be saved or forgotten [56].

Forgetting gate. After new information is input, if the architecture needs to forget the old information, the forgetting gate is used to accomplish this. The forgetting gate is a vital element of the long short-term memory network: it controls what information to retain and what information to forget, and to some extent avoids the vanishing and exploding gradients caused by backpropagation of gradients through time. The forgetting gate identifies what information the long short-term memory network discards from the cell state of the previous moment. The gate reads the relevant information and maps it via a function to a value between zero and one, which is then multiplied by the cell state to determine what information to discard. When the value is one, the information is completely kept, and when the value is zero, the information is completely discarded [57].

Input gate. The input gate is used to update the cell state. First, the previous hidden state and the current input are passed to the sigmoid function. The result lies in the range of zero to one and determines what information to modify: zero means not essential, and one means crucial. Second, the previous hidden state and the current input are passed to the tanh function to obtain a new candidate value vector. Finally, the output of the sigmoid function is multiplied by the output of the tanh function. The sigmoid output identifies which information in the tanh output is significant and needs to be retained [58,59].

Output gate. The output gate is employed to predict the output of the subsequent hidden state, which includes the information that has been input earlier. First, we pass the previous hidden state and the current input to the sigmoid function, and then deliver the updated cell state to the tanh function. Finally, the result of the tanh function is multiplied by the output of the sigmoid function
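
The three gates described in this section can be sketched as a single time step of a long short-term memory cell. The sketch below is only an illustration under stated assumptions: the function names (`sigmoid`, `affine`, `lstm_step`) and the parameter keys (`W_f`, `b_f`, and so on) are hypothetical and do not come from the article, the gates read the concatenation of the previous hidden state and the current input, and the final hidden-state update follows the standard long short-term memory formulation.

```python
import math


def sigmoid(z):
    """Map a real number to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """Return W @ v + b, where W is a list of rows and b, v are lists."""
    return [sum(w * x for w, x in zip(row, v)) + b_k for row, b_k in zip(W, b)]


def lstm_step(x, h_prev, c_prev, p):
    """One LSTM time step (illustrative; parameter names are assumptions).

    x      -- current input vector
    h_prev -- previous hidden state
    c_prev -- previous cell state
    p      -- dict of weight matrices W_* and bias vectors b_*
    """
    # Every gate reads the previous hidden state together with the current input.
    v = h_prev + x  # list concatenation

    # Forgetting gate: values near 0 discard, values near 1 keep the old cell state.
    f = [sigmoid(z) for z in affine(p["W_f"], p["b_f"], v)]

    # Input gate: sigmoid decides what to modify, tanh proposes candidate values.
    i = [sigmoid(z) for z in affine(p["W_i"], p["b_i"], v)]
    g = [math.tanh(z) for z in affine(p["W_c"], p["b_c"], v)]

    # Updated cell state: gated old state plus gated candidate, point by point.
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]

    # Output gate: sigmoid gate multiplied by tanh of the updated cell state.
    o = [sigmoid(z) for z in affine(p["W_o"], p["b_o"], v)]
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c
```

If all weights and biases are set to zero, every gate evaluates to 0.5 and the candidate vector is zero, so the new cell state is simply half of the previous one. A large positive forgetting-gate bias combined with a large negative input-gate bias instead leaves the cell state essentially unchanged, which matches the "completely kept" case described above.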

Volume 9 Issue 4 (2023) 340 https://doi.org/10.18063/ijb.739