Materials Science in Additive Manufacturing Bead geometry prediction in laser-arc AM

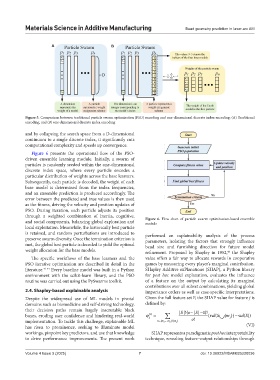

A B

Figure 5. Comparison between traditional particle swarm optimization (PSO) encoding and one-dimensional discrete index encoding: (A) Traditional

encoding, and (B) one-dimensional discrete index encoding

and by collapsing the search space from a D-dimensional continuum to a single discrete index, it substantially reduces computational complexity and accelerates convergence.
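The index encoding, together with the optimization loop summarized in Figure 6, can be sketched in plain Python as below. This is a minimal sketch, not the authors' implementation. It assumes that a particle is a fixed-length list of real positions, each floored to a base-model index, so that a model's weight is the relative frequency of its index; it also assumes the root-mean-square error as the fitness and a fixed iteration budget as the termination criterion. The function names (`decode`, `ensemble_predict`, `rmse`, `pso_ensemble`) and all hyperparameter values are hypothetical, and a hand-written global-best PSO stands in for the PySwarms toolkit so that the sketch needs only the standard library.

```python
import math
import random


def decode(particle, n_models):
    """Turn a particle (1-D list of real positions) into ensemble weights.

    Assumption: each position is floored to a base-model index in
    [0, n_models - 1]; a model's weight is the relative frequency of its index.
    """
    counts = [0] * n_models
    for x in particle:
        idx = min(max(math.floor(x), 0), n_models - 1)
        counts[idx] += 1
    return [c / len(particle) for c in counts]


def ensemble_predict(weights, base_preds):
    """Weighted sum of the base models' predictions (one list per model)."""
    n = len(base_preds[0])
    return [sum(w * preds[i] for w, preds in zip(weights, base_preds))
            for i in range(n)]


def rmse(y_true, y_pred):
    """Root-mean-square error, used here as the (assumed) fitness."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def pso_ensemble(base_preds, y_true, length=20, n_particles=15, iters=50,
                 w=0.7, c1=1.5, c2=1.5, p_perturb=0.05, seed=0):
    """Global-best PSO over index-encoded particles; returns (weights, error)."""
    rng = random.Random(seed)
    k = len(base_preds)

    def fitness(p):
        return rmse(y_true, ensemble_predict(decode(p, k), base_preds))

    # Random initialization inside the index space [0, k).
    pos = [[rng.uniform(0, k) for _ in range(length)] for _ in range(n_particles)]
    vel = [[0.0] * length for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_f = [fitness(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]

    for _ in range(iters):  # termination criterion: fixed iteration budget
        for i in range(n_particles):
            for d in range(length):
                r1, r2 = rng.random(), rng.random()
                # Inertia + cognitive (personal best) + social (global best).
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Keep the position inside [0, k) so it decodes to a valid index.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], 0.0), k - 1e-9)
                # Random perturbation to preserve swarm diversity.
                if rng.random() < p_perturb:
                    pos[i][d] = rng.uniform(0, k)
            f = fitness(pos[i])
            # Retain the best positions found so far (personal and global).
            if f < pbest_f[i]:
                pbest[i], pbest_f[i] = pos[i][:], f
                if f < gbest_f:
                    gbest, gbest_f = pos[i][:], f
    return decode(gbest, k), gbest_f


if __name__ == "__main__":
    y = [1.0, 2.0, 3.0, 4.0]
    base = [[1.0, 2.0, 3.0, 4.0],   # hypothetical accurate model
            [2.0, 3.0, 4.0, 5.0],   # hypothetical model biased by +1
            [0.0, 0.0, 0.0, 0.0]]   # hypothetical constant model
    weights, err = pso_ensemble(base, y)
    print(weights, err)
```

Because each weight is a count divided by the particle length, the decoded weights are automatically non-negative and sum to one. Under these assumptions, that is one practical appeal of a frequency-based encoding: no separate normalization or constraint handling is needed during the search.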

Figure 6 presents the operational flow of the PSO-driven ensemble learning module. First, a swarm of particles is randomly initialized within the one-dimensional discrete index space, where each particle encodes a particular distribution of weights across the base learners. Each particle is then decoded: the weight of each base model is determined from the frequency of its index, and an ensemble prediction is produced accordingly. The error between the predicted and true values serves as the fitness, which drives the velocity and position updates of the PSO. During iteration, each particle adjusts its position through a weighted combination of inertia, cognitive, and social components, balancing global exploration and local exploitation. Meanwhile, the historically best particle is retained, and random perturbations are introduced to preserve swarm diversity. Once the termination criterion is met, the global best particle is decoded to yield the optimal weight allocation for the base models.

Figure 6. Flow chart of particle swarm optimization-based ensemble module

The specific workflows of the base learners and of the iterative PSO optimization are described in detail in the literature.41-44 All base models were built in Python with the scikit-learn library, and the PSO routine was implemented with the PySwarms toolkit.

2.4. Shapley-based explainable analysis

Despite the widespread use of ML models in critical domains such as biomedicine and autonomous driving, their decision-making processes remain largely opaque black boxes, which erodes user trust and hinders real-world deployment. To address this challenge, explainable ML has gained prominence, seeking to illuminate how models work, identify key predictors, and use that knowledge to improve performance. The present work performed an explainability analysis of the process parameters to isolate the factors that most strongly influence bead size and to provide direction for future model refinement. Proposed by Shapley in 1952,45 the Shapley value offers a fair way to allocate rewards among players in a cooperative game by measuring each player's marginal contribution. SHapley Additive exPlanations (SHAP), a Python library for post hoc model explanation, evaluates the influence of a feature on the output by averaging its marginal contributions over all subset combinations, yielding both global importance rankings and case-specific (local) interpretations. Given the full feature set $F = \{m_1, \ldots, m_o\}$, the SHAP value $\phi_j$ for feature $m_j$ is defined by:

$$
\phi_j = \sum_{S \subseteq \{m_1, \ldots, m_o\} \setminus \{m_j\}} \frac{|S|!\,(o - |S| - 1)!}{o!} \left[ \mathrm{val}(S \cup \{m_j\}) - \mathrm{val}(S) \right] \qquad \text{(VI)}
$$

where $S$ is a subset of features that excludes $m_j$, and $\mathrm{val}(S)$ denotes the model output obtained with only the features in $S$.

SHAP represents a paradigmatic post hoc interpretability technique, revealing feature–output relationships through

Volume 4 Issue 3 (2025) 7 doi: 10.36922/MSAM025220036